By Elana Goldenkoff, University of Michigan and Erin A. Cech, University of Michigan

A chatbot turns hostile. A test version of a Roomba vacuum collects images of users in private situations. A Black woman is falsely identified as a suspect on the basis of facial recognition software, which tends to be less accurate at identifying women and people of color.

These incidents are not just glitches, but examples of more fundamental problems. As artificial intelligence and machine learning tools become more integrated into daily life, ethical considerations are growing, from privacy issues and race and gender biases in coding to the spread of misinformation.

The general public depends on software engineers and computer scientists to ensure these technologies are created in a safe and ethical manner. As a sociologist and doctoral candidate interested in science, technology, engineering and math education, we are currently researching how engineers in many different fields learn and understand their responsibilities to the public.

Yet our recent research, as well as that of other scholars, points to a troubling reality: The next generation of engineers often seems unprepared to grapple with the social implications of their work. What’s more, some appear apathetic about the moral dilemmas their careers may bring – just as advances in AI intensify such dilemmas.

Aware, but unprepared

As part of our ongoing research, we interviewed more than 60 electrical engineering and computer science master’s students at a top engineering program in the United States. We asked students about their experiences with ethical challenges in engineering, their knowledge of ethical dilemmas in the field and how they would respond to scenarios in the future.

First, the good news: Most students recognized potential dangers of AI and expressed concern about personal privacy and the potential to cause harm – like how race and gender biases can be written into algorithms, intentionally or unintentionally.

One student, for example, expressed dismay at the environmental impact of AI, saying AI companies are using “more and more greenhouse power, [for] minimal benefits.” Others discussed concerns about where and how AIs are being applied, including for military technology and to generate falsified information and images.

When asked, however, “Do you feel equipped to respond in concerning or unethical situations?” students often said no.

“Flat out no. … It is kind of scary,” one student replied. “Do YOU know who I’m supposed to go to?”

Another was troubled by the lack of training: “I [would be] dealing with that with no experience. … Who knows how I’ll react.”

Other researchers have similarly found that many engineering students do not feel satisfied with the ethics training they do receive. Common training usually emphasizes professional codes of conduct, rather than the complex socio-technical factors underlying ethical decision-making. Research suggests that even when presented with particular scenarios or case studies, engineering students often struggle to recognize ethical dilemmas.

‘A box to check off’

Accredited engineering programs are required to “include topics related to professional and ethical responsibilities” in some capacity.

Yet ethics training is rarely emphasized in the formal curricula. A study assessing undergraduate STEM curricula in the U.S. found that coverage of ethical issues varied greatly in content, amount and how seriously it was presented. Additionally, an analysis of academic literature about engineering education found that ethics is often considered nonessential training.

Many engineering faculty express dissatisfaction with students’ understanding, but report feeling pressure from engineering colleagues and students themselves to prioritize technical skills in their limited class time.

Researchers in one 2018 study interviewed over 50 engineering faculty and documented hesitancy – and sometimes even outright resistance – toward incorporating public welfare issues into their engineering classes. More than a quarter of professors they interviewed saw ethics and societal impacts as outside “real” engineering work.

About a third of students we interviewed in our ongoing research project share this seeming apathy toward ethics training, referring to ethics classes as “just a box to check off.”

“If I’m paying money to attend ethics class as an engineer, I’m going to be furious,” one said.

These attitudes sometimes extend to how students view engineers’ role in society. One interviewee in our current study, for example, said that an engineer’s “responsibility is just to create that thing, design that thing and … tell people how to use it. [Misusage] issues are not their concern.”

One of us, Erin Cech, followed a cohort of 326 engineering students from four U.S. colleges. This research, published in 2014, suggested that engineers actually became less concerned over the course of their degree about their ethical responsibilities and understanding the public consequences of technology. Following them after they left college, we found that their concerns regarding ethics did not rebound once these new graduates entered the workforce.

Joining the work world

When engineers do receive ethics training as part of their degree, it seems to work.

Along with engineering professor Cynthia Finelli, we conducted a survey of over 500 employed engineers. Engineers who received formal ethics and public welfare training in school are more likely to understand their responsibility to the public in their professional roles, and recognize the need for collective problem solving. Compared to engineers who did not receive training, they were 30% more likely to have noticed an ethical issue in their workplace and 52% more likely to have taken action.

Over a quarter of these practicing engineers reported encountering a concerning ethical situation at work. Yet approximately one-third said they have never received training in public welfare – not during their education, and not during their career.

This gap in ethics education raises serious questions about how well-prepared the next generation of engineers will be to navigate the complex ethical landscape of their field, especially when it comes to AI.

To be sure, the burden of watching out for public welfare is not shouldered by engineers, designers and programmers alone. Companies and legislators share the responsibility.

But the people who are designing, testing and fine-tuning this technology are the public’s first line of defense. We believe educational programs owe it to them – and the rest of us – to take this training seriously.

About the Authors:

Elana Goldenkoff, Doctoral Candidate in Movement Science, University of Michigan and Erin A. Cech, Associate Professor of Sociology, University of Michigan

This article is republished from The Conversation under a Creative Commons license. Read the original article.

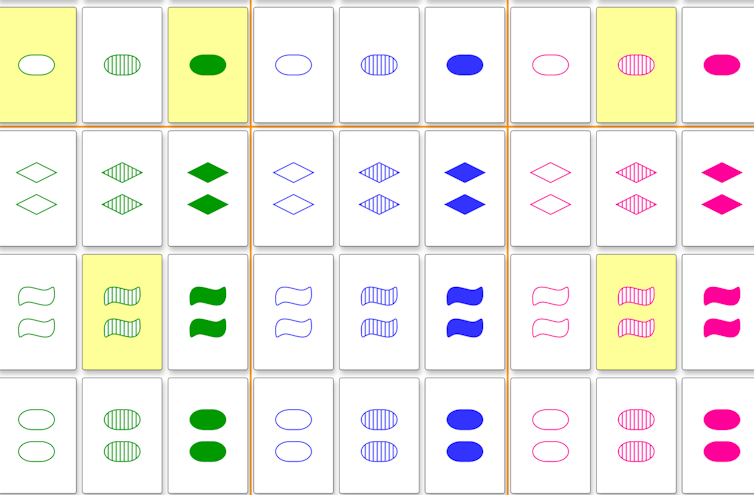

Can you find a matching set?

Can you find a matching set?

An autonomous race car built by the Technical University of Munich prepares to pass the University of Virginia’s entrant.

An autonomous race car built by the Technical University of Munich prepares to pass the University of Virginia’s entrant.